Channels are connected to message backends using connectors, and the Kafka connector maps channels to Kafka topics. This is a good example of how to integrate a Kafka consumer with a downstream system, in this case by exposing it as a Server-Sent Events endpoint.

Kafka topic explorer, viewer, editor, and automation tool. Kafka Magic Search Query. Your query can be pretty much any JavaScript statement or set of statements. The only requirement is that at some point it must return a boolean value specifying whether this is the message you are looking for.

Is there an elegant way to query a Kafka topic for a specific record? The REST API that I'm building gets an ID and needs to look up records. One approach is to check every record in the topic via a custom consumer and look for a match, but I'd like to avoid the overhead of reading a bunch of records.
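The trade-off in this question can be sketched in plain Python: scanning every record costs time proportional to the size of the topic on each lookup, whereas consuming the topic once into a keyed table makes later lookups cheap. The record layout and all names below are hypothetical, and the list stands in for a topic; this is a model of the idea, not the Kafka client API.

```python
from typing import Dict, Iterable, Optional, Tuple

Record = Tuple[str, str]  # (record ID, payload); a hypothetical layout


def scan_for_id(records: Iterable[Record], wanted_id: str) -> Optional[str]:
    """Linear scan: read records until the first match, O(n) per lookup."""
    for record_id, payload in records:
        if record_id == wanted_id:
            return payload
    return None


def build_index(records: Iterable[Record]) -> Dict[str, str]:
    """Consume the records once and keep the latest payload per ID."""
    index: Dict[str, str] = {}
    for record_id, payload in records:
        index[record_id] = payload  # later records overwrite earlier ones
    return index


# A list standing in for the contents of a topic (assumed data).
topic = [("a1", "first"), ("b2", "second"), ("a1", "updated")]
index = build_index(topic)
```

Note that the scan returns the first match while the index holds the most recent value for each ID, so the two approaches answer slightly different questions when an ID occurs more than once.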

Learn more about the kafka-topics tool. Use Case 1: Registering and Querying a Schema for a Kafka Topic.

Learn how to run Kafka topics using Kafka brokers in this article by Raúl Estrada, a programmer since 1996 and a Java developer since 2001. The real art behind a server is in its configuration. This article will examine how to deal with the basic configuration of a Kafka broker in standalone mode.

Applications that need to read data from Kafka use a KafkaConsumer to subscribe to Kafka topics and receive messages from these topics. In order to understand how to read data from

Apache Kafka is an amazing system for building a scalable data streaming platform within an organization. It's being used in production from all the Today, I'll be discussing how one can do that in regards to Apache Kafka and its core data structure: a topic. As most engineers who have

Consumer Groups and Offset Management: How can I read a topic from its beginning? There are multiple strategies to read a topic from its beginning. To explain those, we first need to understand what happens at consumer startup.
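What happens at consumer startup can be sketched roughly as follows: for each assigned partition the consumer resumes from the offset its group last committed, and only when no valid committed offset exists does the `auto.offset.reset` policy decide where to start. The function and parameter names below are hypothetical; this is a plain Python model of that decision, not the Kafka client itself.

```python
from typing import Optional


def resolve_start_offset(
    committed: Optional[int],
    log_start: int,
    log_end: int,
    auto_offset_reset: str = "latest",
) -> int:
    """Model where a consumer starts reading a single partition."""
    # A committed offset inside the retained range always wins.
    if committed is not None and log_start <= committed <= log_end:
        return committed
    # No usable committed offset: fall back to the reset policy.
    if auto_offset_reset == "earliest":
        return log_start
    if auto_offset_reset == "latest":
        return log_end
    # Models the "none" policy, where the consumer reports an error instead.
    raise ValueError("no committed offset and no reset policy")
```

Under this model, reading from the beginning means either starting with a consumer group that has no committed offsets while using `earliest`, or explicitly repositioning an existing group to the start of the log.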

kafka-console-consumer --bootstrap-server localhost:9092 --topic latest-product-price --property --property : --from-beginning p3:11$ p6:12$ p5:14$ p5:17$. As you see records with duplicated keys are removed.
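Assuming the topic uses log compaction (`cleanup.policy=compact`), the output above can be modelled in a few lines of Python. Compaction keeps the most recent record per key in the closed part of the log and leaves the active segment untouched, which is one way a key such as `p5` can still appear twice. The input records and the split into closed and active parts are invented for illustration.

```python
from typing import List, Tuple

Record = Tuple[str, str]  # (key, value)


def compact(closed: List[Record], active: List[Record]) -> List[Record]:
    """Keep the last record per key in the closed part; keep the active part as is."""
    # Position of the last occurrence of each key in the closed records.
    last_index = {key: i for i, (key, _) in enumerate(closed)}
    kept = [rec for i, rec in enumerate(closed) if last_index[rec[0]] == i]
    # Records in the active segment are not compacted.
    return kept + list(active)


# Hypothetical topic contents before compaction.
closed_records = [("p3", "10$"), ("p5", "7$"), ("p3", "11$"),
                  ("p6", "12$"), ("p5", "14$")]
active_records = [("p5", "17$")]
compacted = compact(closed_records, active_records)
```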

Kafka Streams is built as a library that can be embedded into a self-contained Java or Scala application. It allows developers to define stream processors that perform data transformations or aggregations on Kafka messages and, when the exactly-once processing guarantee is enabled, ensures that each input message is processed exactly once.

This article describes how to create a Kafka topic and explains how to describe newly created and existing topics in Kafka. To create an Apache Kafka topic from the command line, run the command and specify the topic name, replication factor, and other attributes.

When querying Kafka topic data with SQL such as SELECT * FROM topicA WHERE transaction_id=123: if the Kafka topic contains a billion 50 KB messages, that would require scanning 50 TB of data. Depending on your network capabilities, brokers' performance, any quotas
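Working the numbers for this scenario: one billion messages of 50 KB each come to roughly 50 TB in decimal units. The short calculation below also estimates how long moving that much data would take over a 1 Gbit/s link; the link speed is an assumption chosen purely for illustration, and the function names are hypothetical.

```python
MESSAGE_COUNT = 1_000_000_000
MESSAGE_SIZE_BYTES = 50 * 1000          # 50 KB, decimal units
ASSUMED_LINK_BITS_PER_S = 1e9           # 1 Gbit/s, an illustrative assumption


def scan_volume_bytes(message_count: int, message_size_bytes: int) -> int:
    """Total bytes a full scan of the topic has to read."""
    return message_count * message_size_bytes


def transfer_hours(total_bytes: int, link_bits_per_second: float) -> float:
    """Hours needed to move total_bytes over a link of the given speed."""
    return total_bytes * 8 / link_bits_per_second / 3600


total_bytes = scan_volume_bytes(MESSAGE_COUNT, MESSAGE_SIZE_BYTES)
total_terabytes = total_bytes / 1e12
hours = transfer_hours(total_bytes, ASSUMED_LINK_BITS_PER_S)
```

Under these assumptions a full scan moves about 50 TB and takes on the order of a hundred hours on a single link, which is why an unindexed `WHERE` clause over a large topic is expensive.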

1. Overview. In this quick tutorial, we're going to see how we can list all topics in an Apache Kafka cluster. First, we'll set up a single-node Apache Kafka and Zookeeper cluster. Then, we'll ask that cluster about its topics. 2. Setting Up Kafka.

Data in Kafka is organized into topics that are split into partitions for parallelism. Each partition is an ordered, immutable sequence of records, and can be thought of as a structured commit log. Producers append records to the tail of these logs and consumers read the logs at their own pace.
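The commit-log model described here can be sketched as a small Python class: appends only ever go to the tail, each record's offset is its position in the log, and every consumer tracks its own read position. The class and method names are hypothetical and model a single partition in memory.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class PartitionLog:
    """In-memory model of one partition: an append-only sequence of records."""
    _records: List[Any] = field(default_factory=list)

    def append(self, record: Any) -> int:
        """Append to the tail and return the offset assigned to the record."""
        self._records.append(record)
        return len(self._records) - 1

    def read(self, offset: int, max_records: int = 10) -> List[Tuple[int, Any]]:
        """Return up to max_records (offset, record) pairs starting at offset."""
        batch = self._records[offset:offset + max_records]
        return [(offset + i, record) for i, record in enumerate(batch)]


log = PartitionLog()
for value in ["a", "b", "c"]:
    log.append(value)

# Two consumers read the same log at their own pace.
fast_consumer_position = 2
slow_consumer_position = 0
fast_batch = log.read(fast_consumer_position)
slow_batch = log.read(slow_consumer_position, max_records=2)
```

Reading does not remove anything from the log, so the slow consumer still sees the records the fast consumer has already passed.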

How to Create Kafka Topics. Kafka topics can be created either automatically or manually. A Kafka topic can be cleared (also referred to as being cleaned or purged) by reducing the retention time. For instance, if the retention time is 168 hours (one week), then reduce retention time down to

Kafka messages (or records, in its terminology) are uniquely identified by the combination of the topic name, the partition number and the offset of the record. This is effectively the primary key of the record, if you want to use a database
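To make the primary-key analogy concrete, the three coordinates can be modelled as an immutable tuple and used directly as a dictionary key. The type name, topic name, and sample values are hypothetical.

```python
from typing import Dict, NamedTuple


class RecordId(NamedTuple):
    """The coordinates that uniquely identify a record."""
    topic: str
    partition: int
    offset: int


# A lookup table keyed by record coordinates (sample data is invented).
records: Dict[RecordId, str] = {
    RecordId("orders", 0, 0): "first record of partition 0",
    RecordId("orders", 1, 0): "first record of partition 1",
}

same_offset_other_partition = RecordId("orders", 1, 0)
```

The same offset in two different partitions refers to two different records, which is why all three parts are needed.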

Interactive Queries allow you to leverage the state of your application from outside your application. To query the full state of your application, you must connect the various fragments of the state This example shows how to configure and run a Kafka Streams application that supports the discovery

In a release of Confluent Platform, the REST Proxy added new Admin API capabilities, including functionality to list and create topics on your cluster.

Command: --list --zookeeper localhost:2181. You can also use the kafka-topics command with a properties file when connecting to a Kafka broker secured by a password. To do this, first create a properties file like the one below and then issue the kafka-topics command.

Introduction to Kafka Topic Management. ic-kafka-topics is a tool developed by Instaclustr that can be used to manage Kafka topics using a connection to a Kafka broker. ic-kafka-topics is based on the standard kafka-topics tool, but unlike kafka-topics, it does not require a zookeeper

To read from Kafka for streaming queries, we can use function Kafka server addresses and topic names are required. Spark can subscribe to one or more topics and wildcards can be used to match with multiple topic names similarly as the batch query

Kafka topics are divided into a number of partitions, each of which contains messages in an immutable sequence. Kafka optionally replicates topic partitions so that, if the leader of a partition fails, a follower replica can take over and become the new leader.

Topics are the core component of Kafka. Let us explore what topics are and how to create, configure, list, and delete Kafka topics. Kafka topics are always multi-subscriber, which means each topic can be read by one or more consumers. Just like a file name, a topic name should be unique.

Introduction to Kafka Topic. Kafka offers several features that are useful for real-time data processing. How does a Kafka topic work? A topic stores records or messages under a specific name. To organize and process the data properly, a topic can use multiple Kafka partitions.

How does Kafka work in a nutshell? Kafka is a distributed system consisting of servers and clients The Kafka Streams demo and the app development tutorial demonstrate how to code and run such a Provided support to query stale stores (for high availability) and the stores belonging to a

--zookeeper localhost:2181 --delete --topic mytopic. Get number of messages in a topic: GetOffsetShell only worked with PLAINTEXT; how can this be achieved with SASL_PLAINTEXT?
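Whichever tool supplies the offsets, the usual way to estimate the number of messages in a topic is to subtract each partition's beginning offset from its end offset and sum the results. The sketch below assumes the offsets have already been fetched into dictionaries keyed by partition number; the function name and sample numbers are hypothetical.

```python
from typing import Dict


def estimate_message_count(
    beginning_offsets: Dict[int, int],
    end_offsets: Dict[int, int],
) -> int:
    """Sum (end - beginning) over all partitions of a topic."""
    return sum(
        end_offsets[partition] - beginning_offsets[partition]
        for partition in end_offsets
    )


# Invented offsets for a topic with three partitions.
beginning = {0: 100, 1: 0, 2: 250}
end = {0: 400, 1: 120, 2: 250}
estimated = estimate_message_count(beginning, end)
```

The result is an estimate rather than an exact count: on compacted topics, or topics written with transactions, some offsets in the range no longer correspond to readable messages.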

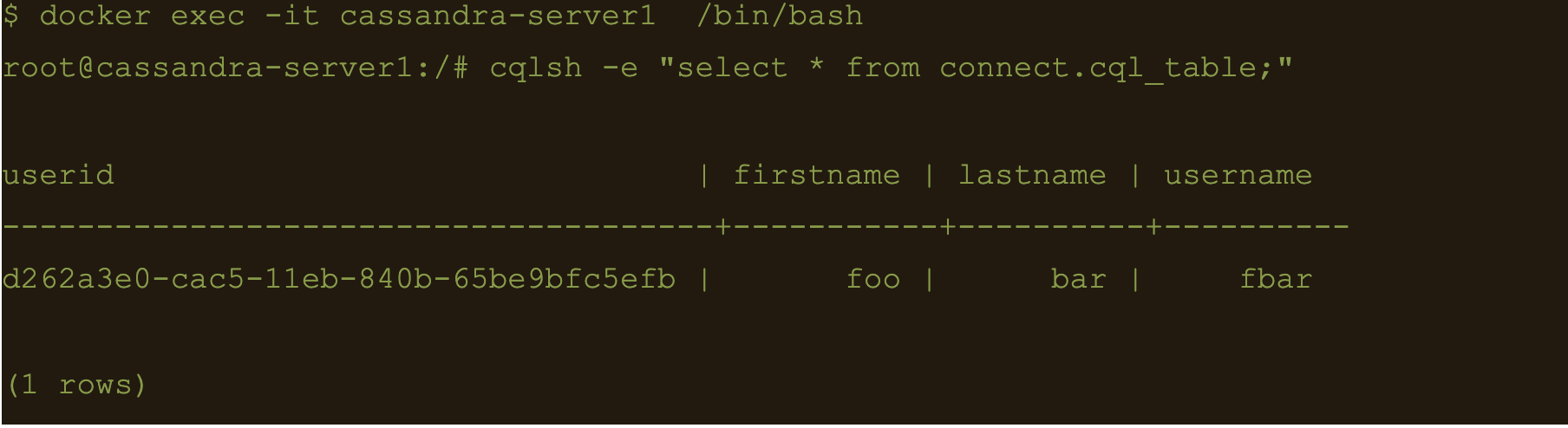

Kafka Training: Using Kafka from the command line starts up ZooKeeper, and Kafka and then uses Kafka command line tools to create a topic, produce some messages Kafka also provides a utility to work with topics called which is located at ~/kafka-training/kafka/