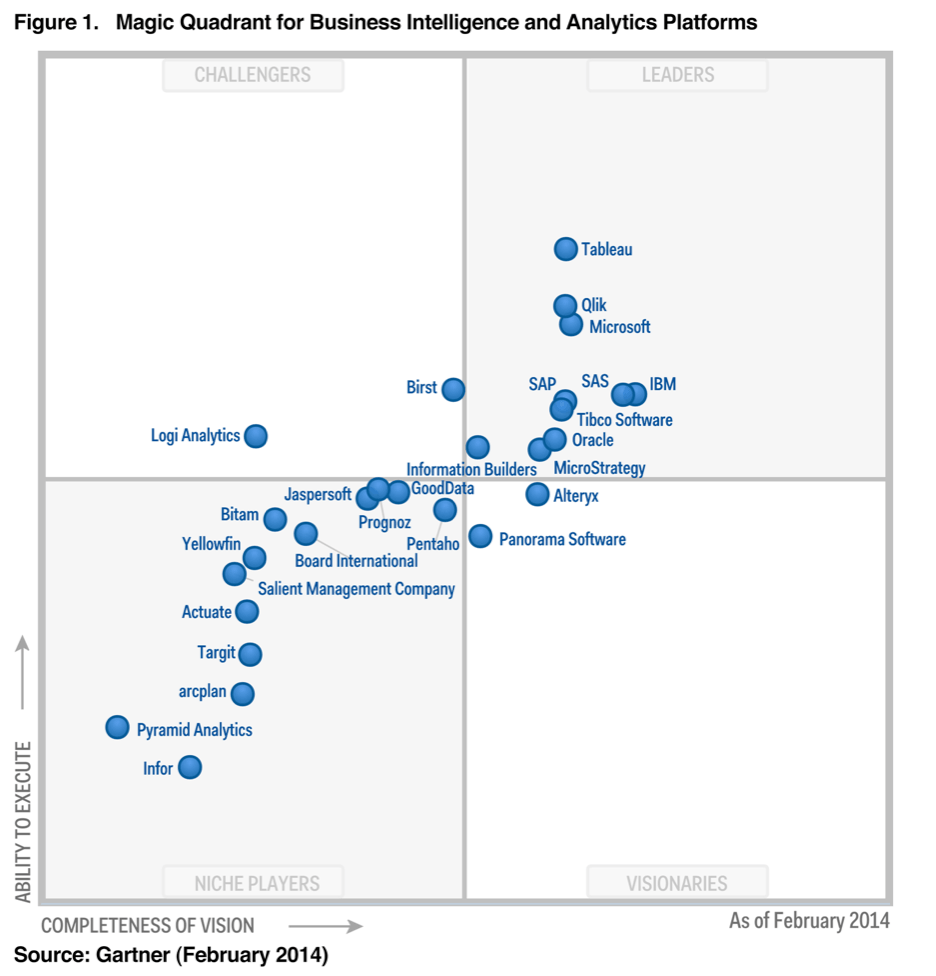

Extract, Transform, and Load (ETL) operations are used to prepare data and load it into a data destination. Apache Hive on HDInsight can read in unstructured data, process the data as needed, and then load the data into a relational data warehouse for decision support systems.

Testing the ETL process is different from how regular software testing is performed. It's virtually impossible to take the process apart and do unit … A test strategy is a document that lists information about why we're testing, what methods we're going to use, and what people or tools we will need for …

Testing Scenarios: ETL test scenarios are used to validate an ETL testing process. The following table explains some of the most common scenarios and test cases that are used by E. ... The mapping document should have a change log, …

ETL Certification: the end-to-end process. Nationally Recognised Testing Laboratory (NRTL). A thorough explanation of the true legal requirements behind third party … Authorisation to Mark: a document that the applicant receives on completion of his …

ETL is the process of extracting data from a variety of sources and formats and converting it to a single format before putting it into a database. Have you ever wondered how data from many sources is integrated to create a single source of information? Batch processing is a sort of collecting data …

ETL (Extract, Transform, Load) is an automated process which takes raw data, extracts the information required for analysis, transforms it into a format that can serve business needs, and loads it to a data warehouse. ETL typically summarizes data to reduce its size and improve performance
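The three stages described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the CSV content, the `sales_by_region` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """order_id,region,amount
1,north,10.50
2,south,4.00
3,north,2.25
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: convert amounts to numbers and summarize them per region."""
    totals = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + float(row["amount"])
    return sorted(totals.items())

def load(conn, summary):
    """Load: write the summarized rows into a (stand-in) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", summary)
    conn.commit()

def run_etl(conn, text=RAW_CSV):
    """Run extract, transform, and load in order, then read back the result."""
    load(conn, transform(extract(text)))
    return conn.execute(
        "SELECT region, total FROM sales_by_region ORDER BY region"
    ).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` runs the whole cycle. The summarizing step reflects the point that ETL often reduces data volume before loading: three order rows become two regional totals.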

ETL Process Overview. As seen above, Informatica PowerCenter can load data from various sources and store it in a single data warehouse. We have completed the design of how the data is to be transferred from the source to the target. However, the actual transfer of data is still yet …

Testing is a way to perform validation of the data as it moves from one data store to another. The ETL Tester uses a Mapping Document (if one exists), which is also known as a source-to-target map. This is the critical element required to efficiently plan the target Data Stores. It also defines the Extract, Transform and Load (ETL) process.
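A source-to-target map can be represented directly as data, which makes it usable both as documentation and as an executable specification. The sketch below assumes a hypothetical customer table; the column names and rules are illustrative and do not come from any particular tool.

```python
# Hypothetical source-to-target map: target column -> (source column, transformation rule).
MAPPING = {
    "customer_id": ("cust_no", int),
    "full_name": ("name", str.strip),
    "country_code": ("country", str.upper),
}

def apply_mapping(source_row, mapping=MAPPING):
    """Build one target row by applying each mapping rule to its source column."""
    return {
        target: rule(source_row[source])
        for target, (source, rule) in mapping.items()
    }

def missing_source_columns(source_row, mapping=MAPPING):
    """List source columns that the mapping expects but the row does not contain."""
    expected = {source for source, _ in mapping.values()}
    return sorted(expected - set(source_row))
```

For example, `apply_mapping({"cust_no": "42", "name": " Ada ", "country": "gb"})` yields a target row with an integer id, a trimmed name, and an upper-cased country code. A tester can run `missing_source_columns` first to confirm that the source actually provides every column the map refers to.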

ETL is a type of data integration that refers to the three steps (extract, transform, load) used to blend data from multiple sources. It's often used to build a data warehouse. During this process, data is taken (extracted) from a source system, converted (transformed) into a format that can be analyzed, and stored (loaded) into a data warehouse or other system.

ETL processes revolutionize business analytics and automate manual data operations. Learn how you can transform your business with an ETL … In this article, we've broken down the ETL process, explained how it works, and demonstrated how an ETL process can help you streamline …

This ETL tutorial explains what the ETL process is and what it means in technical terms. ETL is a very important concept that every IT professional …

In computing, extract, transform, load (ETL) is the general procedure of copying data from one or more sources into a destination system which represents the data differently from the source(s) or in a different context than the source(s)...

What tool can I use to document ETL processes? (Quora) Answer (1 of 3): Informatica PowerCenter can be used to create useful documentation for an ETL …

10, 2018 · The initial step of any data process, including ETL, is data mapping. Businesses can use the mapped data for producing relevant insights to improve business efficiency. During the data mapping process, the source data is directed to the targeted database. The target database can be a relational database or a CSV document — depending on the ...

ETL stands for Extract, Transform, Load. ETL extracts the data from different sources (an Oracle database, an XML file, a text file, etc.), then transforms the data (by applying aggregate functions, keys, joins, etc.) using the ETL tool, and finally loads the data into the data warehouse.

Learn how the ETL process works and its significance for businesses that want to get more value through its implementation. Terms like automated ETL process, data mart, data lake, or warehousing would've been incomprehensible to most people, or worse, a buzzword people use

The ETL process allows businesses to employ previously unused or underused data to improve their … How will you collate all the data from different locations, formats, and structures into a unique … ETL stands for Extract, Transform, and Load. ETL is a group of processes designed to turn …

ETL is a type of data integration process referring to three distinct but interrelated steps (Extract … Usage and Latency. Another consideration is how the data is going to be loaded and how it will be … The main objective of the extraction process in ETL is to retrieve all the required data from the …

Batch Hybrid Extract, Transform, Load, Transform, Load (ETLTL) is a hybrid strategy. This strategy provides the most flexibility to remove hand-coding approaches to transformation design, apply a metadata-driven approach, and … All data movement among ETL processes is composed of jobs.

What is ETL? ETL stands for Extract, Transform and Load. In the ETL process, an ETL tool extracts the data from different source systems, then … It's tempting to think that creating a data warehouse is simply extracting data from multiple sources …

ETL is one of the key stages in data processing. It involves both methodologies and technologies. The main task of an ETL developer, or a dedicated team of developers, is to: outline the ETL process, setting the borders of data processing; provide system architecture for each element and the whole data pipeline …

ETL test scenarios are used to validate an ETL testing process. The following table explains some of the most common … How to Perform ETL Testing Performance Tuning? The mapping document has details of the source and target columns, data transformation rules and the data types, all …
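Typical test scenarios derived from a mapping document can be expressed as small check functions. The three checks below (row count reconciliation, data type validation, and transformation rule validation) are a hedged sketch; the function names and the row format (lists of dicts) are assumptions made for illustration.

```python
def check_row_counts(source_rows, target_rows):
    """Completeness check: every source row should arrive in the target."""
    return len(source_rows) == len(target_rows)

def check_column_types(target_rows, expected_types):
    """Return (row_index, column) pairs whose value is not of the expected type."""
    return [
        (i, col)
        for i, row in enumerate(target_rows)
        for col, typ in expected_types.items()
        if not isinstance(row.get(col), typ)
    ]

def check_transformation(source_rows, target_rows, source_col, target_col, rule):
    """Return indexes of rows where the target value differs from rule(source value)."""
    return [
        i
        for i, (src, tgt) in enumerate(zip(source_rows, target_rows))
        if rule(src[source_col]) != tgt[target_col]
    ]
```

Each function returns either a boolean or a list of failures, so an empty list means the scenario passed. Note that `check_transformation` assumes source and target rows are in the same order; a real test would usually join them on a key instead.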

ETL means the Extract, Transform, and Load process of collecting and synthesizing data. Documenting requirements and outlining the ETL process. Creating models to describe the data extraction taking place during ETL.

This manual explains how to use SAS ETL Studio to do the following tasks: specify metadata for data sources … This manual also summarizes how to set up servers, libraries, and other resources that SAS ETL Studio … SAS ETL Studio provides N-tier support for processes that flow across multiple …

What You Will Learn: ETL (Extract, Transform, Load) Process Fundamentals. By going through the mapping rules from this document, the ETL architects, developers and testers should have a good understanding of how data flows from each table as dimensions, facts, and any other tables.

ETL: Extract Transform Load. This tutorial shows you how to create and use a simple ETL within a single database.

04, 2020 · Document ETL Process. There are some ETL requirements that are necessary to streamline the data process. It is important that you create external documentation carrying all the steps and data maps for each configuration. These data maps should have graphs, including source data, destination datasets, and summary information for each step of the ...

ETL processing may be viewed as a processing framework for managing all the data within an organization and arranging it into a structured form. In shorter words, ETL is the process of converting information from disparate sources and in a variety of formats into a unified whole.

A Technical Design Document (TDD) is written by the development team and describes the minute detail of either the entire design or specific parts of it, such as: the signature of an interface, including all data types/structures required (input data types, output data types, exceptions); detailed class models that include all methods, attributes, dependencies, …

ETL (Extract, Transform, and Load) is essentially the most important process that any data goes through as it passes along the Data Stack. This is followed by changing the data suitably or Transforming the data. The final step is to load the data to the desired database or warehouse.

Extract, Load, Transform (ELT) is a data integration process for transferring raw data from a source server to a data warehouse on a target server and then preparing the information for downstream uses.
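The difference from ETL is the order of operations: in ELT the raw data lands in the target first and is transformed there. The sketch below uses an in-memory SQLite database as a stand-in for the target warehouse; the `raw_orders` and `orders` table names and the `run_elt` function are hypothetical.

```python
import sqlite3

def run_elt(conn, raw_rows):
    """Load raw (order_id, amount) string pairs, then transform them inside the target."""
    # Load: copy the raw rows unchanged into a staging table on the target.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw_rows)
    # Transform: cast types and drop empty amounts using the target's own SQL engine.
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT CAST(order_id AS INTEGER) AS order_id, CAST(amount AS REAL) AS amount "
        "FROM raw_orders WHERE amount <> ''"
    )
    return conn.execute("SELECT order_id, amount FROM orders ORDER BY order_id").fetchall()
```

Because the untransformed rows stay in `raw_orders`, the transformation can be rerun or changed later without extracting from the source again, which is one common reason for choosing this ordering.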

The ETL process became a popular concept in the 1970s and is often used in data … Data extraction involves extracting data from …

Find out how ETL and its process assist your business in making sense of that data. How to Improve ETL Performance? So, we are aware of the fact that the ETL process, once planned properly, provides good … It queries different types of data, such as documents, relationships, and metadata.

ETL, which stands for extract, transform and load, is a data integration process that combines data from multiple data sources into a single, consistent data store that is loaded into a data warehouse or other target system.

The ETL process stands for Extract Transform and Load. ETL processes the streaming data in a very traditional way. The main characteristic of the Extract Transform Load(ETL) process is that data extraction, data transformation and loading stages to the Data Warehouse can run in parallel.

04, 2022 · ETL Process Flow. A standard ETL cycle will go through the below process steps: Kick off the ETL cycle to run jobs in sequence. Make sure all the metadata is ready. ETL cycle helps to extract the data from various sources. Validate the extracted data. If staging tables are used, then the ETL cycle loads the data into staging.
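The process steps listed above can be sketched as a single function that runs its jobs in sequence. Everything here is an illustrative assumption: the `run_cycle` name, the idea of sources as callables returning rows, the validation rule (rows must have an `id`), and the use of a plain list as the staging area.

```python
def run_cycle(sources, required_metadata, metadata, staging):
    """Run one ETL cycle in sequence and return a log of the completed steps."""
    log = []
    # Make sure all the metadata is ready before any job runs.
    missing = [key for key in required_metadata if key not in metadata]
    if missing:
        raise ValueError(f"metadata not ready: {missing}")
    log.append("metadata_ready")
    # Extract the data from the various sources (each source is a callable).
    extracted = [row for source in sources for row in source()]
    log.append("extracted")
    # Validate the extracted data (hypothetical rule: reject rows without an id).
    valid = [row for row in extracted if row.get("id") is not None]
    log.append("validated")
    # Load the validated rows into staging.
    staging.extend(valid)
    log.append("staged")
    return log
```

Failing fast on missing metadata keeps a half-configured cycle from writing anything to staging, and the returned log gives a simple record of how far the cycle progressed.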

Short for extract, transform & load, ETL is the process of aggregating data from multiple different sources, transforming it to suit the business needs, and … However, ETL has now evolved to become the primary method for processing large amounts of data for data warehousing and data lake projects.