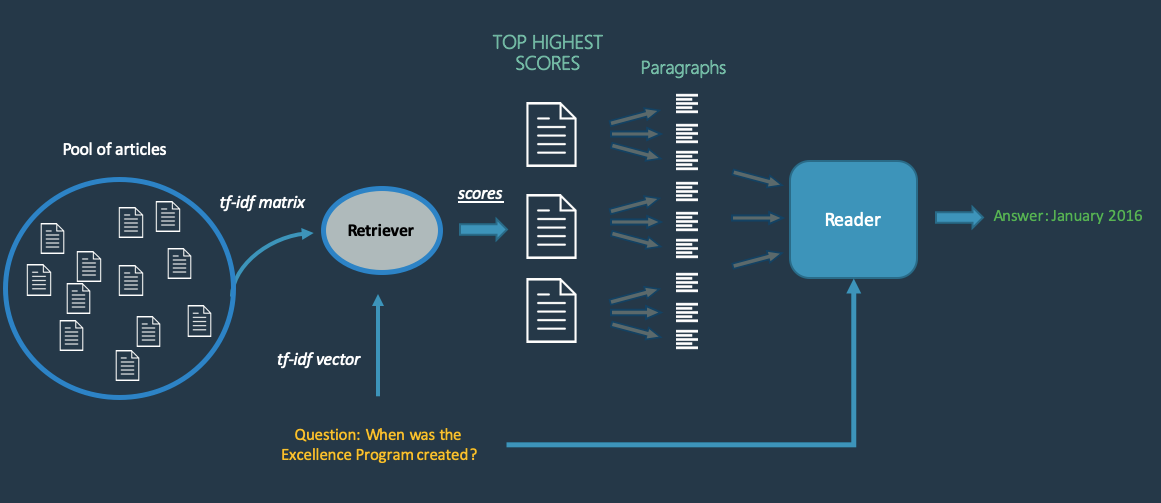

Sections: About The Data Pipeline; Processing and Storing Webserver Logs; Counting visitors with A Data Pipeline; Pulling The Pipeline Together; Adding Another Step to The Data Pipeline; Extending Data Pipelines.

Here’s a simple example of a data pipeline that calculates how many visitors have visited the site each day, getting from raw logs to visitor counts per day. As you can see above, we go from raw log data to a dashboard where we can see visitor counts per day. Note that this pipeline runs continuously: when new entries are added to the server log, it grabs them and processes the… Reading time: 9 mins
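The step from raw logs to visitor counts per day could look roughly like the sketch below. It is not the original article's code: the sample log lines, the log layout, and the names `parse_line` and `visitors_per_day` are assumptions made for illustration, and "visitor" is approximated here as a distinct IP address.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical sample lines in a common web server log layout (invented data).
SAMPLE_LOG = [
    '203.0.113.5 - - [09/Mar/2021:10:15:32 +0000] "GET / HTTP/1.1" 200 512',
    '203.0.113.5 - - [09/Mar/2021:10:16:01 +0000] "GET /about HTTP/1.1" 200 340',
    '198.51.100.7 - - [09/Mar/2021:11:00:00 +0000] "GET / HTTP/1.1" 200 512',
    '203.0.113.5 - - [10/Mar/2021:08:00:00 +0000] "GET / HTTP/1.1" 200 512',
]


def parse_line(line):
    """Return (ip, date) for one log line in the assumed layout."""
    ip = line.split(" ", 1)[0]
    raw_time = line.split("[", 1)[1].split("]", 1)[0]
    day = datetime.strptime(raw_time, "%d/%b/%Y:%H:%M:%S %z").date()
    return ip, day


def visitors_per_day(lines):
    """Count distinct IP addresses seen on each calendar day."""
    seen = defaultdict(set)
    for line in lines:
        ip, day = parse_line(line)
        seen[day].add(ip)
    return {day.isoformat(): len(ips) for day, ips in sorted(seen.items())}


counts = visitors_per_day(SAMPLE_LOG)
print(counts)
```

A continuously running version would feed newly appended log lines into the same function instead of a fixed list.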

Learn how to build data engineering pipelines in Python by cleaning and transforming data before safely deploying code and scheduling complex dependencies. Building Data Engineering Pipelines covers new technologies and material, so we recommend that you have a strong understanding of

Using generators to create data processing pipelines in Python. You can use them to create very efficient data processing pipelines. In this article, I will show you three practical examples. We will see how to string together several filters to create a data processing pipeline.
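As a minimal sketch of stringing filters together with generators, the stages below each consume an iterable and yield results one at a time. The stage names `only_even` and `squared` are hypothetical and not taken from the article.

```python
from itertools import count, islice


def only_even(numbers):
    """Filter stage: pass through even numbers only."""
    for n in numbers:
        if n % 2 == 0:
            yield n


def squared(numbers):
    """Transform stage: square each incoming number."""
    for n in numbers:
        yield n * n


# count(1) is an infinite source; nothing is computed until a value is requested.
pipeline = squared(only_even(count(1)))

# Pull only the first three results through the whole chain.
first_three = list(islice(pipeline, 3))
print(first_three)
```

Because each stage is lazy, the pipeline works on an unbounded source and holds only one item per stage in memory at a time.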

Below, learn how to build an ETL pipeline in Python and transform your data integration projects. An ETL pipeline is the sequence of processes that move data from a source (or several sources) into a database, such as a data warehouse.

How to insert data into a table manually. How to query and fetch data from the tables. Ready to start building your data pipeline in Python? Proceed to Defining your first table now!

In this article I'll talk about how to process a collection of items in Python through several steps with relative efficiency and flexibility while keeping your code… You just learned the basics of implementing a data pipeline in Python using iterators, congrats! No less, you managed to cope with my…

Wondering how to write memory-efficient data pipelines in Python? Working with a dataset that is too large to fit into memory? Hope this article gives you a good understanding of how to use generators to write memory-efficient data pipelines. The next time you have to build a data pipeline to…

In this article, you will learn how to build scalable data pipelines using only Python code. Despite the simplicity, the pipeline you build will be able to scale to large amounts of data with some degree of flexibility.

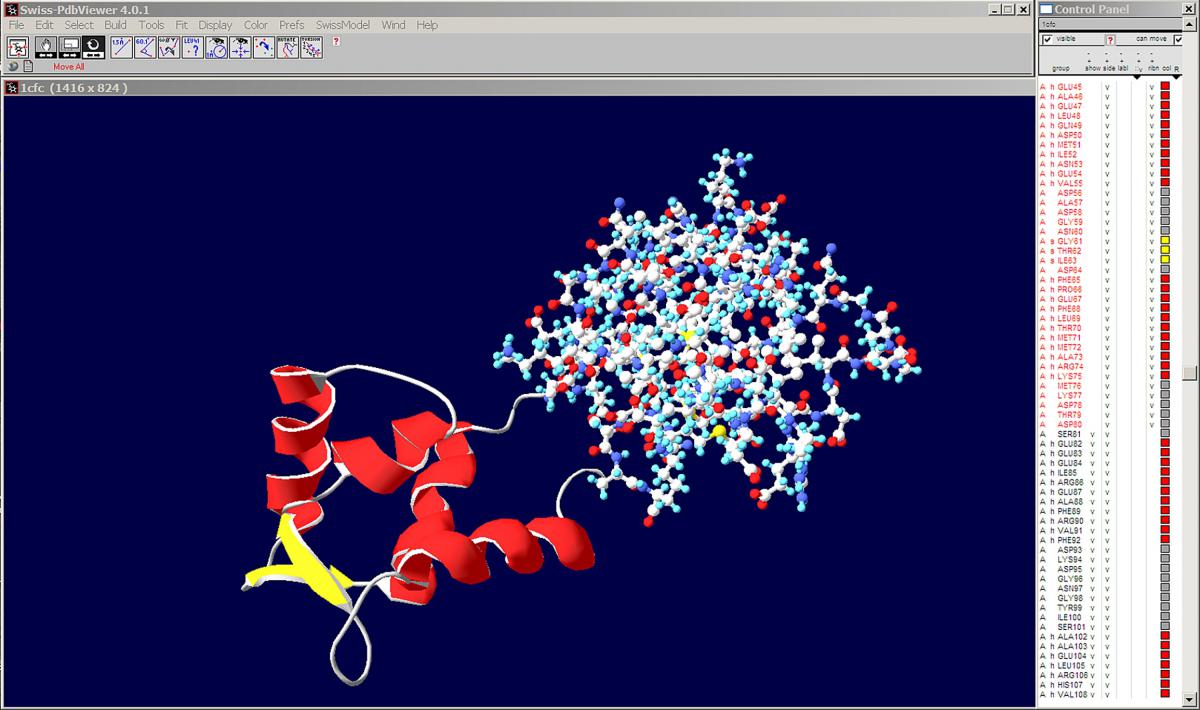

The pipeline data structure is interesting because it is very flexible. It consists of a list of arbitrary functions that can be applied to a collection of objects and produce a list of results. A lot of Python developers enjoy Python's built-in data structures like tuples, lists, and dictionaries.
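A pipeline of this kind can be sketched as a plain list of callables plus a small runner. The name `run_pipeline` and the sample steps are assumptions for illustration, not the article's implementation.

```python
def run_pipeline(steps, items):
    """Apply every function in `steps`, in order, to each item and collect the results."""
    results = []
    for item in items:
        for step in steps:
            item = step(item)
        results.append(item)
    return results


# The pipeline itself is just a list of arbitrary one-argument functions.
steps = [
    str.strip,                        # remove surrounding whitespace
    str.lower,                        # normalise case
    lambda s: s.replace(" ", "_"),    # turn spaces into underscores
]

cleaned = run_pipeline(steps, ["  Raw Logs ", "Visitor Counts"])
print(cleaned)
```

Reordering, adding, or removing a step only means editing the list, which is where the flexibility comes from.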

10, 2022 · Python celebrated its 30th birthday earlier this year, and the programming language has never been more popular. With the rise of data science and artificial intelligence, Python is still the go-to choice for data engineers everywhere, including those who build ETL… However, building an ETL pipeline in Python isn't for the faint of heart. You'll …

Introduction: A data pipeline is a sequence of steps in data preprocessing. Data pipelines allow you to use a series of steps to convert data from one representation to another. The speed at which data passes through a data pipeline relates to three factors: rate, or throughput, is how much information a pipeline can…

Sections: Problem; Generators Introduction; Building The Pipeline; Summary.

Let’s look at the following demands for a data pipeline: How would you approach solving such a problem? What I’d try to do at first is write a function for each of those “building blocks”, then run each of those functions in an endless loop of user input, and just reorganise the functions in the order needed for the pipeline. This approach has 2 obvious pr… Reading time: 6 mins

Understanding how data is passed between components. When Kubeflow Pipelines runs a component, a container image is started in a Kubernetes… The following sections demonstrate how to get started building a Kubeflow pipeline by walking through the process of converting a…

In this sklearn pipeline tutorial you will learn to create your own pipeline with custom transformations and a classifier. Pipelines are a great way to apply sequential transformations on your data and to feed the result to a classifier. In this blog post, we'll make a treemap in Python using Plotly Express.

Flexible: Pypeln enables you to build pipelines using Processes, Threads and … via the exact same API. Notice that here we even used a regular Python filter, since stages are iterables. Pypeln integrates smoothly with any Python code; just be aware of how each stage behaves.

You can use Azure Pipelines to build, test, and deploy Python apps and scripts as part of your CI/CD system. After you're happy with the message, select Save and run again. If you want to watch your pipeline in action, select the build job. You just ran a pipeline that we automatically created for

24, 2020 · Introduction: A data pipeline is a sequence of steps in data preprocessing. Data pipelines allow you to use a series of steps to convert data from one representation to another. A key part of data engineering is data pipelines. A common use case for a data pipeline is to find details about your web… Author: Tom Robertson. Estimated reading time: 4 mins

So, in general, what would be a recommended industry way of building data pipelines in terms of code/structure? Do you test your data pipeline with regular Python tests, or use the pandas DataFrame testing functions? Do you create a test CSV (including all the possible cases the code…

Building An Analytics Data Pipeline In Python.

In this tutorial, you will build a data processing pipeline to analyze the most common words from the most popular books on Project Gutenberg. Follow How To Install Python 3 and Set Up a Local Programming Environment on Ubuntu to configure Python and install virtualenv.
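The word-counting step at the heart of such a pipeline might be sketched as follows. This is an illustration only: the function name `most_common_words` and the sample text are assumptions, and the tutorial's actual code (which downloads books from Project Gutenberg) is not reproduced here.

```python
import re
from collections import Counter


def most_common_words(text, n):
    """Return the n most frequent lowercase words in `text` with their counts."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words).most_common(n)


# Invented sample text standing in for the contents of a downloaded book.
SAMPLE_TEXT = "The cat and the dog and the bird"

top_two = most_common_words(SAMPLE_TEXT, 2)
print(top_two)
```

In a full pipeline this function would run once per downloaded book, with a later step summing the per-book counters.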

Hevo Data, a no-code data pipeline, helps to load data from any data source such as databases, SaaS applications, cloud storage, SDKs, and streaming services and simplifies the ETL process. It supports 100+ data sources (including 40+ free data sources) and is a 3-step process by…

Building Data Engineering Pipelines in Python. Learn how to build data engineering pipelines in Python. 4 Hours, 14 Videos, 52 Exercises, 16,209 Learners.

My mission was to build a pipeline from scratch - starting with retrieving weather data from the OpenWeatherMap current weather web API, parsing the data using Pandas (python data analysis) library and storing it in a local SQLite database.

How to Build a K-Means Clustering Pipeline in Python. Your gene expression data aren't in the optimal format for the KMeans class, so you'll need to build a preprocessing pipeline.

15, 2020 · Let us understand how to build an end-to-end pipeline using Python. Go through these videos to learn more about PyCharm, Git as well as setting up and…

Query, group, and join data in MongoDB using aggregation pipelines with Python. First, I'll show you how to build up a pipeline that duplicates behaviour that you can already achieve with MQL queries, using PyMongo's find() method, but instead using an aggregation pipeline with $match, $

Building the input pipeline in a machine learning project is always long and painful, and can take more time than building the actual model. Former data pipelines made the GPU wait for the CPU to load the data, leading to performance issues. Before explaining how works with a simple

In this post you'll learn how we can use Python's generators to create data streaming pipelines. For production-grade pipelines we'd probably use a suitable framework like Apache Beam, but this feature is needed to build your own custom Apache Beam components. The Problem.

10, 2021 · The first step in the Python ETL pipeline is extraction, i.e., getting data from the source. Then, the data is processed in the second stage, known as Transform. The final stage of the data pipeline framework is Load, which involves writing the data to its final destination.
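The three stages can be sketched with only the standard library. Everything specific here is an assumption made for illustration: the inline CSV data, the `payments` table, and the function names `extract`, `transform`, and `load`.

```python
import csv
import io
import sqlite3

# Invented source data; the second row is deliberately malformed.
RAW_CSV = "name,amount\nalice, 10\nbob,not_a_number\ncarol,7\n"


def extract(text):
    """Extract: read raw rows from the source (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean names, parse amounts, and drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = int(row["amount"].strip())
        except ValueError:
            continue
        clean.append((row["name"].strip().title(), amount))
    return clean


def load(records, conn):
    """Load: write the cleaned records into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
total = conn.execute("SELECT SUM(amount) FROM payments").fetchone()[0]
print(total)
```

Keeping each stage as its own function makes it possible to test the transform logic without touching the source or the database.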

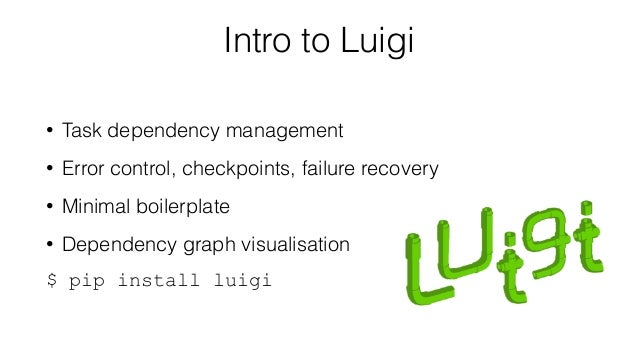

Another important aspect to consider is how to resume a pipeline. For example, if the first few tasks are completed, but then an error occurs half-way… We have described the definition of data pipelines using Luigi, a workflow manager written in Python. Luigi provides a nice abstraction to define…
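One common way to make a pipeline resumable is to have each task write an output file and skip any task whose output already exists. The sketch below shows that idea in plain Python. It is not Luigi's API: `run_task`, the file layout, and the task names are hypothetical.

```python
import os
import tempfile


def run_task(name, func, out_dir):
    """Run `func` only if its output file is missing; return True if it ran."""
    target = os.path.join(out_dir, name + ".txt")
    if os.path.exists(target):
        return False  # already completed in an earlier run, so skip it
    result = func()
    tmp_path = target + ".tmp"
    with open(tmp_path, "w") as handle:
        handle.write(result)
    # Rename only after a successful write, so a crash never leaves a partial target.
    os.replace(tmp_path, target)
    return True


out_dir = tempfile.mkdtemp()
tasks = [("extract", lambda: "raw data"), ("clean", lambda: "clean data")]

first_run = [run_task(name, func, out_dir) for name, func in tasks]
second_run = [run_task(name, func, out_dir) for name, func in tasks]
print(first_run, second_run)
```

If a task raises an error, the tasks before it keep their output files, so the next run picks up at the failed task rather than starting over.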

In this video, we ingest, transform, and output data in a very simple data pipeline. The things we learn in this video can easily be applied to

12, 2020 · Download the pre-built Data Pipeline runtime environment (including Python) for Linux or macOS and install it using the State Tool into a virtual environment, or follow the instructions provided in my Python Data Pipeline GitHub repository to run the code in a containerized instance of JupyterLab. All set? Let’s dive into the… Reading time: 8 mins

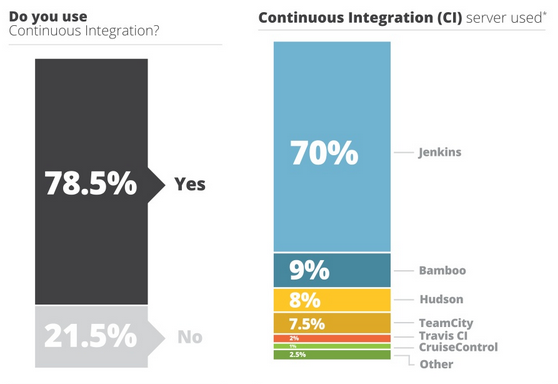

Learn how to build an end-to-end machine learning pipeline for a sales forecasting problem. Understand the structure of a machine learning pipeline. Build an end-to-end ML pipeline on real-world data. You can do this easily in Python using the StandardScaler class.

Transformers are usually combined with classifiers, regressors or other estimators to build a composite estimator. Pipeline is often used in combination with FeatureUnion, which concatenates the output of… This is useful as there is often a fixed sequence of steps in processing the data, for…

Data pipelines allow you to transform data from one representation to another through a series of steps. Data pipelines are a key part of data engineering, which we… After this post, you should understand how to create a basic data pipeline. In the next post(s), we'll cover some of the more advanced…

Python is used in this blog to build a complete ETL pipeline for a data analytics project. We all talk about data analytics and data science problems and find… In this blog, I will walk you through a series of steps that will help you better understand how to provide an end-to-end solution…

Steps: Step 1: Installing Luigi; Step 2: Creating A Luigi Task; Step 3: Creating A Task to Extract A List of Books; Step 4: Running The Luigi Scheduler; Step 5: Downloading The Books; Step 6: Counting Words and Summarizing Results; Step 7: Defining Configuration Parameters; Conclusion.

To complete this tutorial, you will need the following: 1. An Ubuntu server set up with a non-root user with sudo privileges. Follow the Initial Server Setup with Ubuntu. 2. Python … or higher and virtualenv installed. Follow How To Install Python 3 and Set Up a Local Programming Environment on Ubuntu to configure Python and install virtualenv. You’ll se… Published: Feb 04, 2021

Sections: Language Processing: Cleaning The Tweets; Calculating Sentiment; Main Method; Results; Conclusion; Full Python Code.

In my last post, I discussed how we could set up a script to connect to the Twitter API and stream data directly into a database. Today, I am going to show you how we can access this data and do some analysis with it, in effect creating a complete data pipeline from start to finish. Broadly, I plan to extract the raw data from our d… Author: Daniel Foley. Published: Jan 03, 2021. Estimated reading time: 10 mins